Test your website ideas before trusting your gut

It’s easy to believe you know what works on a website. After all, you’ve read the blogs, watched the case studies, maybe even bought into a few “conversion hacks.”

But here’s the problem: What works in one case doesn’t always translate to yours.

I’ve seen this repeatedly: a team implements a “proven” feature, only to realize later that it either made no difference or actually hurt their results.

Let’s look at why that happens, and how to approach changes more thoughtfully.

The danger of assuming what works

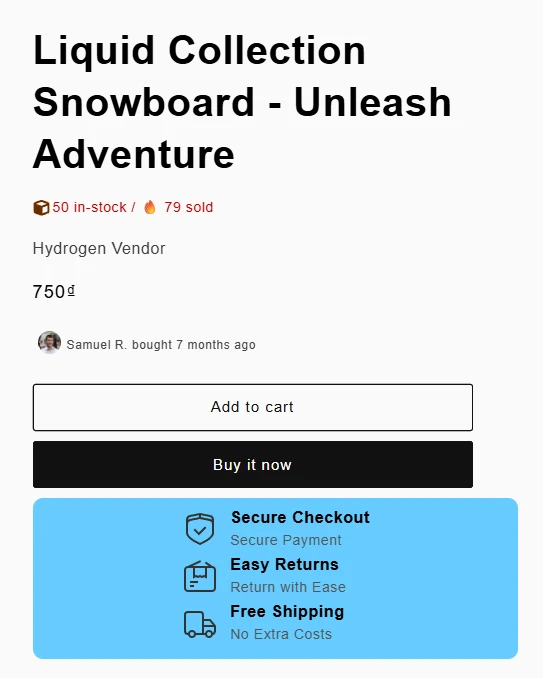

Imagine you’re running an e-commerce site and hear that adding a “Someone just purchased X” pop-up increases conversions. Sounds promising, right? Social proof is powerful. It helps people feel safe making a purchase when they see others doing it too.

But here’s what might happen instead:

The widget clutters your layout (an easy mistake when you pick the wrong app), distracting users from the main call to action.

It overlaps a key button (like Checkout), leading to frustration.

Visitors see repetitive, fake-looking notifications and start doubting your credibility.

Each of these small details can undo any potential benefit. So while the idea may sound convincing in theory, your specific audience, timing, or design context could turn it into a problem instead of a win.

When “best practices” aren’t always best for you

You’ll often hear general rules like “shorter forms convert better” or “add testimonials near your pricing.” These are good starting points, but they’re not guarantees.

What really matters is how those rules fit into your actual user experience. A few examples:

Fewer fields may hurt quality. If you remove too many form fields, you might get more signups but also more unqualified leads.

Testimonials can create doubt. If they look too polished or don’t feel authentic, people might assume they’re fake.

Urgency pop-ups can backfire. A “Sale ends in 10 minutes” timer might increase pressure, but it can also increase cart abandonment if users feel manipulated.

Testing doesn’t have to mean full A/B testing software or complex analytics setups. Sometimes it just means changing one thing at a time and watching what happens for a while.
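To make “changing one thing and watching what happens” a bit more concrete, here is a minimal Python sketch that compares conversion rates before and after a change. The visitor and order counts are made-up numbers, the function names are my own, and the interval uses a simple normal approximation, so treat it as a rough sanity check rather than a rigorous test. A before/after comparison can also be thrown off by seasonality or a marketing campaign, which this sketch does not account for.

```python
import math


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted."""
    return conversions / visitors


def difference_interval(conv_before: int, n_before: int,
                        conv_after: int, n_after: int,
                        z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% interval for (rate after - rate before).

    Uses the normal approximation for the difference of two proportions.
    """
    p_before = conversion_rate(conv_before, n_before)
    p_after = conversion_rate(conv_after, n_after)
    std_error = math.sqrt(
        p_before * (1 - p_before) / n_before
        + p_after * (1 - p_after) / n_after
    )
    diff = p_after - p_before
    return diff - z * std_error, diff + z * std_error


def verdict(low: float, high: float) -> str:
    """Turn the interval into a plain-language reading."""
    if low > 0:
        return "likely improvement"
    if high < 0:
        return "likely harm"
    return "inconclusive, keep watching"


# Hypothetical numbers: 40 orders from 2,000 visitors before the change,
# 52 orders from 2,000 visitors after it.
low, high = difference_interval(40, 2000, 52, 2000)
print(f"Change in conversion rate: {low:+.2%} to {high:+.2%}")
print(verdict(low, high))
```

With these example numbers the interval spans zero, so a jump from 2.0% to 2.6% that looks like a clear win could still be noise. That is exactly the kind of result worth watching for longer before you call it.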

What to do if you can’t run proper tests

If your website doesn’t get enough traffic for statistically valid A/B tests, you can still make data-informed decisions during your design phases.

Here are a few ways:

Use simple observation. Change one thing at a time and track basic metrics like conversion rate, bounce rate, or time on page.

Ask users directly. Use short on-site surveys or feedback widgets. Even a few responses can show patterns.

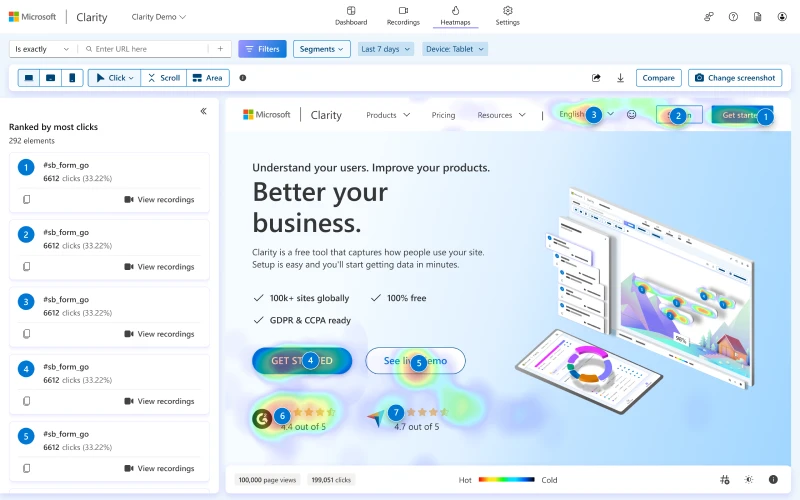

Watch recordings. Tools like Hotjar or Microsoft Clarity let you see how users actually interact with your site.

The key is curiosity. Treat each adjustment as a small experiment, not a sure win.
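If you’re unsure whether your site has enough traffic for a statistically valid A/B test in the first place, a rough estimate can help. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. The baseline rate, the lift you hope to detect, and the daily traffic are all assumptions you would replace with your own numbers, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Rough number of visitors each variant needs in a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(per_variant: int, daily_visitors: int,
                   variants: int = 2) -> int:
    """Days needed if traffic is split evenly across all variants."""
    return math.ceil(per_variant * variants / daily_visitors)


# Hypothetical site: 2% baseline conversion rate, hoping to detect
# a 20% relative lift (2.0% -> 2.4%), with 300 visitors per day.
needed = visitors_per_variant(baseline_rate=0.02, relative_lift=0.20)
print(f"Visitors needed per variant: {needed}")
print(f"Days to finish at 300 visitors/day: {days_to_finish(needed, 300)}")
```

For this example the estimate comes out at roughly 21,000 visitors per variant, which at 300 visitors a day means a test lasting on the order of 140 days. If your own numbers look like that, the lighter-weight methods above are probably the more realistic option.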

Being less certain makes you more effective

It’s easy to fall in love with ideas that sound smart. But websites are complex, and user behavior isn’t always logical. The best approach is to stay flexible and assume nothing until it’s tested.

Even if you can’t test formally, act like you’re running small experiments. Question every “proven” trick, make changes carefully, and observe what actually happens.

That mindset alone will put you ahead of most teams.